Description

Introduction

MovieChat aims to address the challenges of computational complexity, memory cost, and long-term temporal connections for long videos.

Abstract

Recently, integrating video foundation models and large language models to build a video understanding system can overcome the limitations of specific pre-defined vision tasks. Yet existing systems can only handle videos with very few frames. For long videos, computational complexity, memory cost, and long-term temporal connection remain challenging. Inspired by the Atkinson-Shiffrin memory model, we develop a memory mechanism that includes a rapidly updated short-term memory and a compact, and thus sustained, long-term memory. We employ tokens in Transformers as the carriers of memory. MovieChat achieves state-of-the-art performance in long video understanding.

Overview

How MovieChat Works

- Inspired mainly by the Atkinson-Shiffrin memory model, MovieChat proposes a memory mechanism that combines a fast-updating short-term memory with a compact long-term memory.

- Short-term memory holds the recent events in a video and is updated quickly as new events occur.

- Long-term memory is more compact and stores key information from the video that persists over long periods of time.

- In the Transformer model, tokens are used as memory carriers. This means that each token can be regarded as a memory unit, which stores a certain part of the information in the video. In this way, MovieChat can efficiently manage and utilize memory resources when processing long videos.

- The MovieChat framework consists of a visual feature extractor, short- and long-term memory buffers, a video projection layer, and a large language model. Visual features are extracted with pre-trained models such as ViT-G/14 and Q-Former, and a video projection layer then transforms them into a form that the large language model can process.
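The two-level memory described above can be sketched in a few lines. This is a minimal illustration, not MovieChat's actual implementation: the names `MemoryBuffer`, `consolidate`, `short_capacity`, and `consolidated_len` are hypothetical, and the consolidation rule (repeatedly averaging the most similar pair of adjacent tokens) is an assumption, since the text above does not say how short-term memory is compacted into long-term memory.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def consolidate(tokens, target_len):
    """Shrink a token sequence by merging the most similar adjacent pair.

    Assumed rule for illustration only: the merged token is the
    element-wise average of the two tokens it replaces.
    """
    tokens = [list(t) for t in tokens]
    while len(tokens) > max(target_len, 1):
        sims = [cosine(tokens[i], tokens[i + 1]) for i in range(len(tokens) - 1)]
        i = max(range(len(sims)), key=sims.__getitem__)
        merged = [(x + y) / 2 for x, y in zip(tokens[i], tokens[i + 1])]
        tokens[i:i + 2] = [merged]
    return tokens


class MemoryBuffer:
    """Hypothetical short-term / long-term token memory."""

    def __init__(self, short_capacity, consolidated_len):
        self.short_capacity = short_capacity      # tokens held before compaction
        self.consolidated_len = consolidated_len  # tokens kept after compaction
        self.short_term = []                      # fast-updating recent tokens
        self.long_term = []                       # compact, persistent tokens

    def add(self, token):
        self.short_term.append(list(token))
        if len(self.short_term) >= self.short_capacity:
            # Short-term memory is full: compact it and move it to long-term memory.
            self.long_term.extend(consolidate(self.short_term, self.consolidated_len))
            self.short_term.clear()


if __name__ == "__main__":
    buffer = MemoryBuffer(short_capacity=4, consolidated_len=2)
    for i in range(8):
        buffer.add([float(i), 1.0])
    print(len(buffer.short_term), len(buffer.long_term))
```

With these settings, every four incoming tokens are reduced to two long-term tokens, so long-term memory grows at half the rate of the input.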

Working Principle of MovieChat

The working principle of MovieChat mainly includes the following steps:

1. Preprocessing: The video is cut into a series of segments, and each segment is encoded to obtain its feature representation.

2. Memory management: These feature representations are then stored in memory. As new video clips are processed, memory is updated, old information is gradually forgotten, and new information is stored in memory.

3. Question answering: When a question is received, MovieChat generates an answer based on the question and the information in memory. This step uses a Transformer model that can process long sequences and generate responses accordingly.
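The three steps above can be sketched as a small pipeline. Everything here is a stand-in chosen for illustration: `encode_segment` averages frame vectors in place of a real visual encoder, the memory is a fixed-size `deque` that drops the oldest entry when full, and `build_llm_input` only assembles the inputs that a language model would receive. None of these names come from MovieChat itself.

```python
from collections import deque


def split_into_segments(frames, segment_len):
    """Step 1a: cut the frame sequence into consecutive segments."""
    return [frames[i:i + segment_len] for i in range(0, len(frames), segment_len)]


def encode_segment(segment):
    """Step 1b: toy encoder (assumption) that averages the frame vectors."""
    dim = len(segment[0])
    return [sum(frame[d] for frame in segment) / len(segment) for d in range(dim)]


def build_memory(frames, segment_len, capacity):
    """Step 2: store segment features; the oldest entries are forgotten when full."""
    memory = deque(maxlen=capacity)
    for segment in split_into_segments(frames, segment_len):
        memory.append(encode_segment(segment))
    return memory


def build_llm_input(memory, question):
    """Step 3: pair the memory contents with the question for the language model."""
    return {"video_tokens": list(memory), "question": question}


if __name__ == "__main__":
    frames = [[float(i)] for i in range(10)]  # ten one-dimensional "frames"
    memory = build_memory(frames, segment_len=4, capacity=2)
    print(build_llm_input(memory, "What happens at the end?"))
```

A plain bounded queue forgets strictly by age; it is used here only to make the "old information is gradually forgotten" step concrete.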

MovieChat can handle more than 10K video frames on a 24GB graphics card. Its average increase in GPU memory cost per frame is 21.3KB, compared with roughly 200MB per frame for other methods, an improvement of about 10,000×.
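As a quick sanity check on these figures, the arithmetic can be reproduced directly. The snippet assumes decimal units (1KB = 1,000 bytes, 1MB = 1,000,000 bytes), which the source does not specify; the variable names are illustrative.

```python
KB = 1_000        # assumption: decimal kilobyte
MB = 1_000_000    # assumption: decimal megabyte

moviechat_per_frame = 21.3 * KB  # per-frame memory increase quoted for MovieChat
baseline_per_frame = 200 * MB    # approximate per-frame increase quoted for other methods

# How many times smaller the per-frame increase is.
ratio = baseline_per_frame / moviechat_per_frame

# Incremental memory for 10K frames at the quoted per-frame rate.
frames = 10_000
moviechat_growth_mb = frames * moviechat_per_frame / MB

print(f"ratio: about {ratio:,.0f}x")
print(f"growth over {frames:,} frames: about {moviechat_growth_mb:.0f} MB")
```

The ratio comes out at roughly 9,400, which the text rounds to 10,000. At the quoted rate, 10K frames add about 213MB, which is consistent with fitting on a 24GB card; since the figure is an average increase per frame, this total presumably excludes fixed costs such as model weights.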

Open Source

Tags

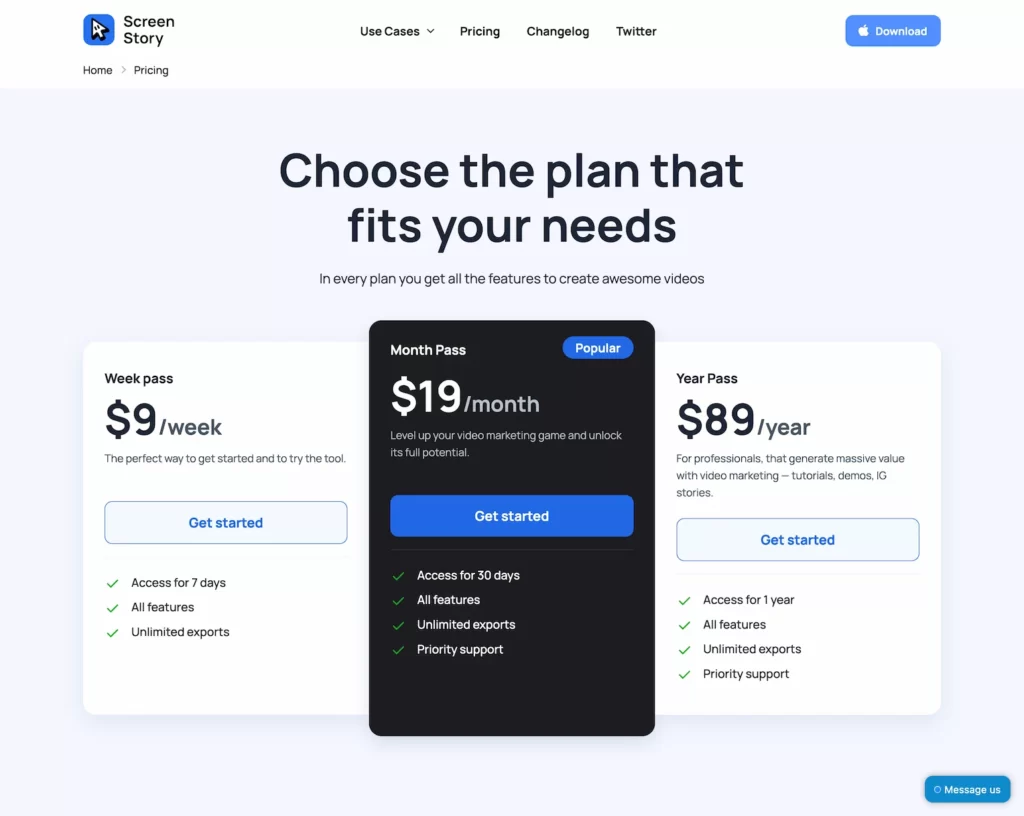

Compare with other popular AI Tools

Compare with Runway

Compare with InVideo-Text prompts to Video

Compare with Eightify-YouTube Video AI Summaries

Compare with Speechify-AI text-to-speech

Compare with simplified

Compare with Opus.pro

Compare with HeyGen-Free AI Video Generator

Compare with FlexClip-AI video editor and maker

Compare with Vidnoz AI-Free Al Video Creator