Description

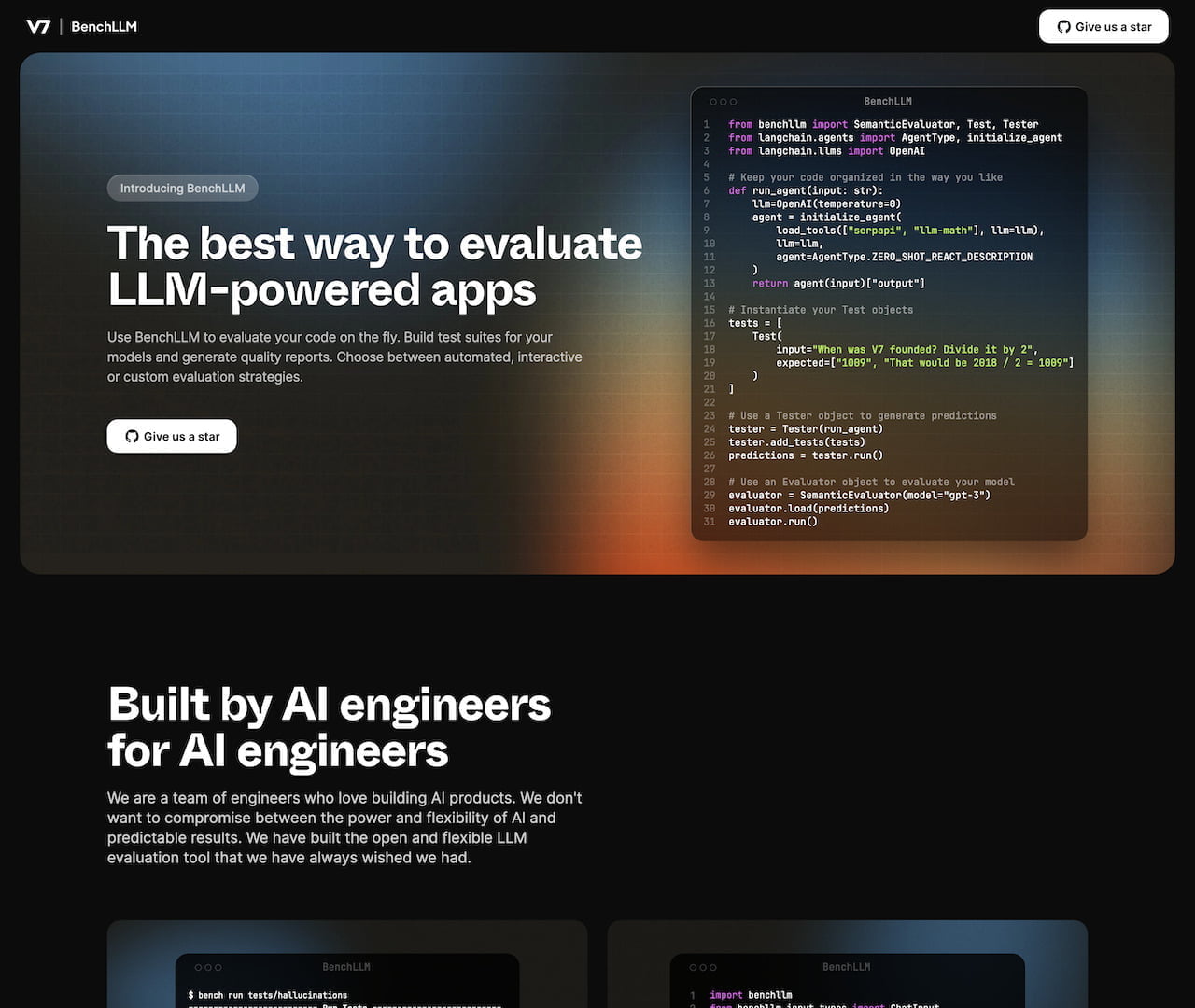

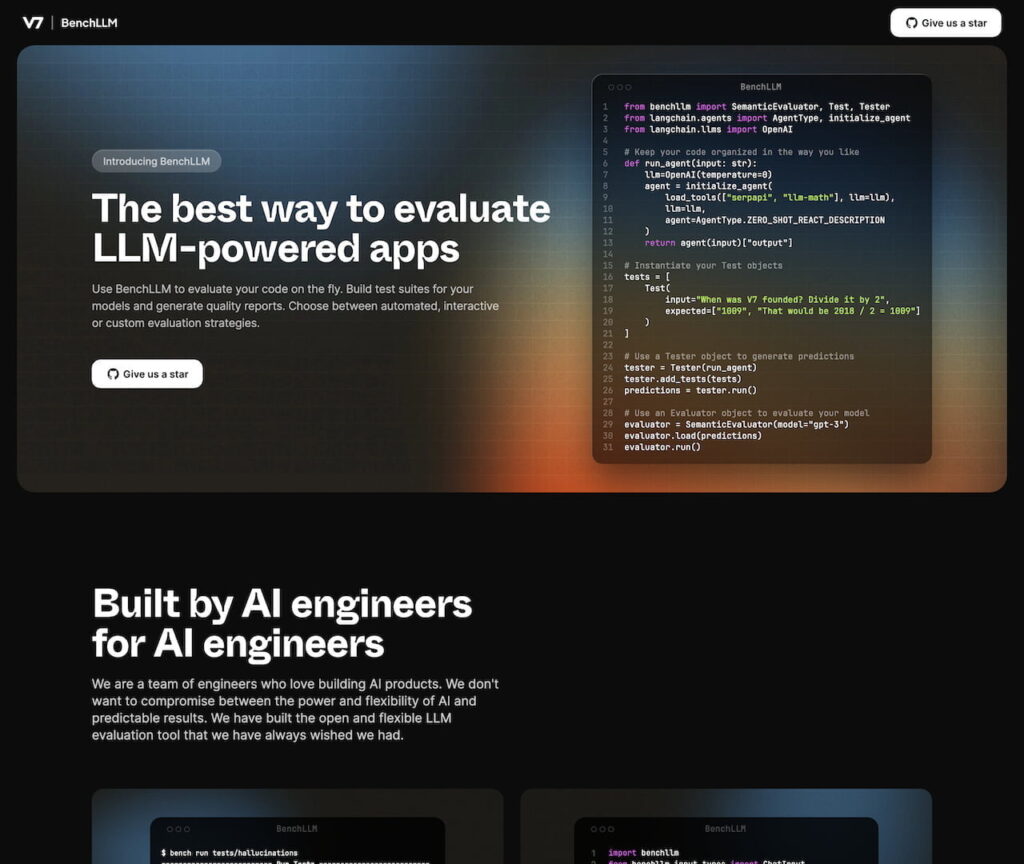

BenchLLM - Evaluate AI Products

BenchLLM is an open-source, Python-based tool for testing and evaluating Large Language Models (LLMs), chatbots, and AI-powered applications. It can evaluate code on the fly, build test suites for models, and generate quality reports. It also lets users automate evaluations and benchmark different models against one another.

By using BenchLLM, developers can simplify and strengthen the testing of their AI products, which makes it a useful resource for AI development.
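To make the idea of a test suite, an automated evaluation, and a quality report more concrete, here is a minimal sketch in plain Python. It does not use BenchLLM's own API. The names `EvalCase`, `toy_model`, `run_suite`, and `summarize` are hypothetical, and the "model" is a canned stand-in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """One test: a prompt plus the answers that count as a pass (hypothetical type)."""
    prompt: str
    expected: List[str]


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns canned answers."""
    answers = {"What is 1 + 1?": "2", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


def run_suite(model: Callable[[str], str], suite: List[EvalCase]) -> List[Dict]:
    """Run every case through the model and score it with a string match."""
    results = []
    for case in suite:
        output = model(case.prompt)
        accepted = {e.strip().lower() for e in case.expected}
        passed = output.strip().lower() in accepted
        results.append({"prompt": case.prompt, "output": output, "passed": passed})
    return results


def summarize(results: List[Dict]) -> str:
    """Produce a one-line report of how many cases passed."""
    passed = sum(1 for r in results if r["passed"])
    return f"{passed}/{len(results)} tests passed"


suite = [
    EvalCase("What is 1 + 1?", ["2", "two"]),
    EvalCase("Capital of France?", ["Paris"]),
    EvalCase("Capital of Peru?", ["Lima"]),
]
results = run_suite(toy_model, suite)
print(summarize(results))  # prints "2/3 tests passed"
```

Swapping `toy_model` for a function that calls a real model, or running the same suite against two different functions, gives a simple form of benchmarking. An exact string match is only one possible evaluator; free-form answers usually need a looser comparison.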

Insights

BenchLLM appears to be a valuable resource for developers working with AI products, especially those built on Large Language Models. Its ability to automate evaluations and benchmark models can save time and effort during the testing phase of a project, and its open-source nature encourages collaboration and community-driven improvements. Tools of this kind help ensure the reliability and performance of AI applications as the field continues to grow. Developers should consider exploring BenchLLM for test-driven development and quality assurance of their AI-powered solutions.