VideoReTalking: audio-based lip synchronization for talking head video editing

Description

VideoReTalking is a system for audio-based lip synchronization in talking head video editing.

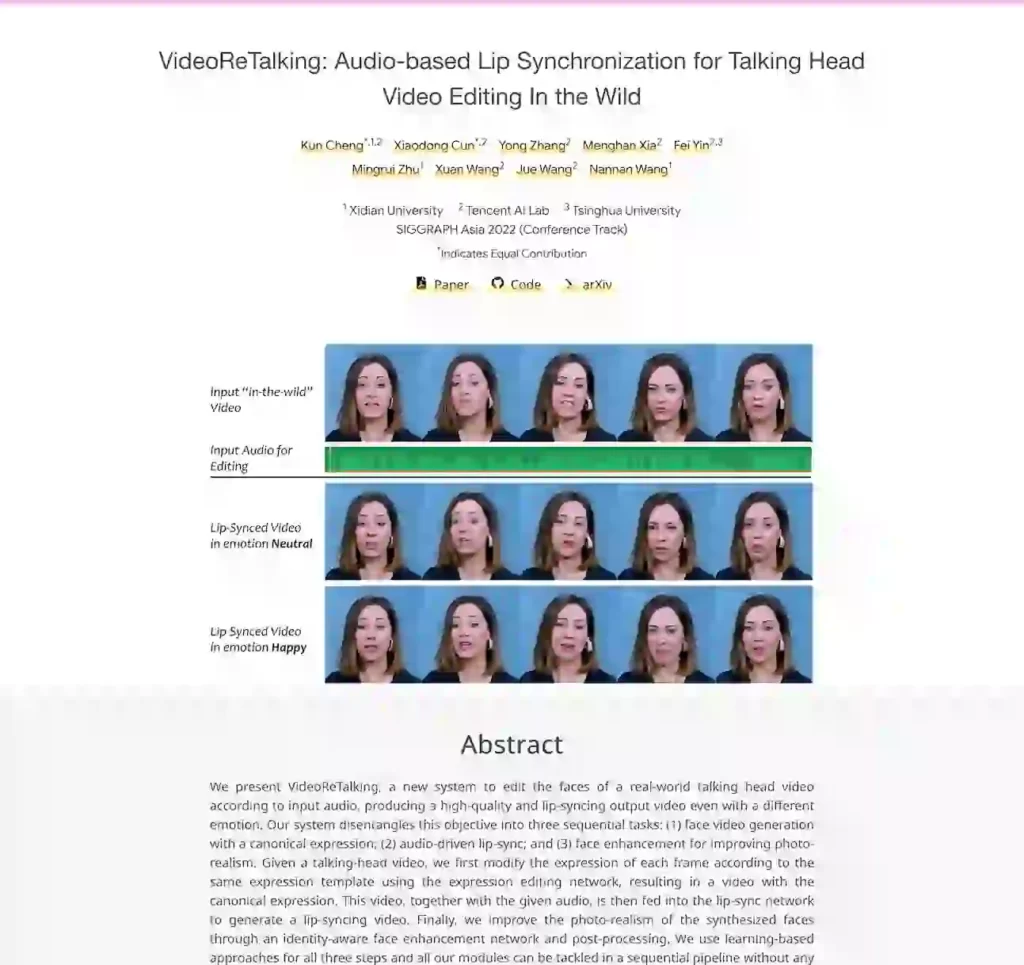

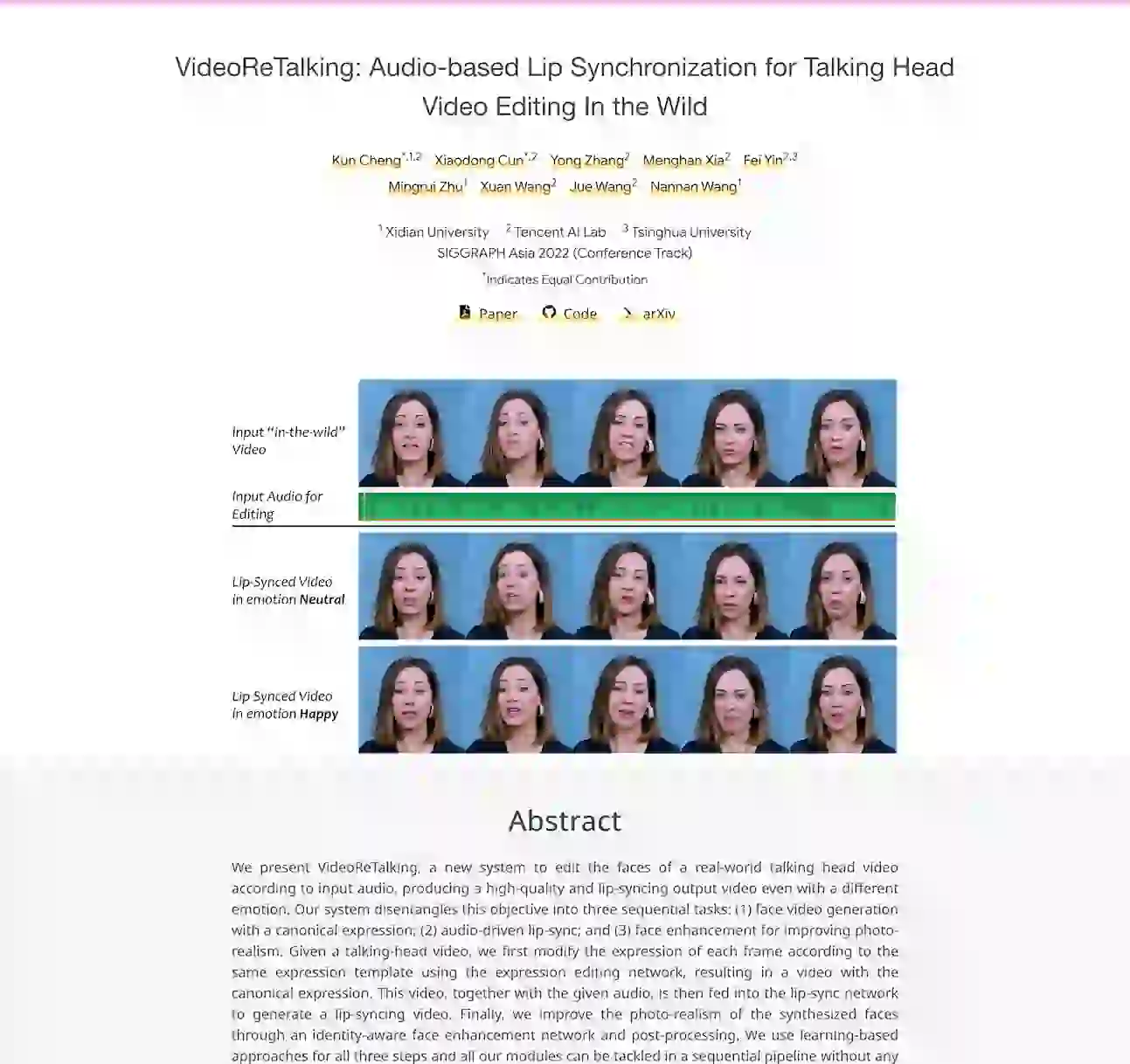

VideoReTalking: Audio-based Lip Synchronization for Talking Head Video Editing In the Wild

- The project synchronizes the lip movements in a talking head video with an input audio track, and targets editing of real-world ("in the wild") footage.

GitHub Repository for VideoReTalking

- The source code and related resources are available in the project's GitHub repository, which includes a web UI, an inference script, and a quick demo notebook.

Web UI Component

- The repository contains a webUI.py file, which provides a web user interface for running lip synchronization on talking head videos.

Quick Demo

- The repository provides a quick demo notebook (quick_demo.ipynb) that shows how to run the VideoReTalking system.

Inference Component

- The repository also includes inference.py, the script that runs lip synchronization inference with VideoReTalking. It relies on libraries such as OpenCV, NumPy, and PyTorch.
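As a hedged sketch of driving the inference script from Python: the `--face`, `--audio`, and `--outfile` flags below follow the usage shown in the repository README at the time of writing and should be checked against `python inference.py --help` in your own clone. The helper names `build_inference_command` and `run_inference` are hypothetical, and the script also needs the pretrained checkpoints described in the README.

```python
import shlex
import subprocess
import sys
from pathlib import Path


def build_inference_command(face: str, audio: str, outfile: str,
                            script: str = "inference.py") -> list[str]:
    """Assemble the argument list for the repository's inference script.

    Assumption: the --face/--audio/--outfile flags match the README usage;
    verify them with `python inference.py --help` in your checkout.
    """
    return [sys.executable, script,
            "--face", face,      # input talking head video
            "--audio", audio,    # driving audio track
            "--outfile", outfile]  # where the lip-synced video is written


def run_inference(face: str, audio: str, outfile: str, repo_dir: str = ".") -> None:
    """Hypothetical helper: run inference.py from a local clone of the repo."""
    # Relative paths are resolved against repo_dir, because the script runs there.
    (Path(repo_dir) / outfile).parent.mkdir(parents=True, exist_ok=True)
    cmd = build_inference_command(face, audio, outfile)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, cwd=repo_dir, check=True)  # raises on a non-zero exit
```

For example, `run_inference("examples/face/1.mp4", "examples/audio/1.wav", "results/1_1.mp4", repo_dir="video-retalking")` would process one input pair, assuming those example files exist in your checkout. Building the argument list in a separate function keeps it testable without a GPU.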

In summary, VideoReTalking is a project for audio-based lip synchronization in talking head video editing. It provides source code, a web user interface, and a quick demo for anyone who wants to explore or use the technology. For detailed information and usage instructions, refer to the GitHub repository and its associated resources.

VideoReTalking: synchronize the mouth shapes of people in a video with an input voice track.

Given any video and an audio file as input, VideoReTalking generates a new video in which the speaker's mouth shapes are synchronized with the audio. Beyond lip synchronization, it can also change the facial expression of the person in the video according to the voice. The entire process runs automatically, without user intervention.
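Synchronizing mouth shapes with audio requires pairing each video frame with a short slice of the audio. The sketch below shows one common way to do this, borrowed from Wav2Lip-style pipelines: it assumes 16 kHz audio converted to a mel spectrogram at 80 frames per second, with a 16-frame (about 0.2 s) mel window per video frame. These values and the function name `mel_windows` are assumptions for illustration, not confirmed details of VideoReTalking.

```python
def mel_windows(num_mel_frames: int, fps: float,
                mel_frames_per_sec: float = 80.0,
                window: int = 16) -> list[tuple[int, int]]:
    """Return (start, end) mel-frame index pairs, one per video frame.

    Assumed Wav2Lip-style defaults: 80 mel frames per second of audio and a
    16-mel-frame window centered loosely on each video frame's timestamp.
    """
    if num_mel_frames < window:
        raise ValueError("audio is shorter than one mel window")
    step = mel_frames_per_sec / fps  # mel frames advanced per video frame
    windows: list[tuple[int, int]] = []
    i = 0
    while True:
        start = int(i * step)
        if start + window > num_mel_frames:
            # Clamp the final window to the end of the audio and stop.
            windows.append((num_mel_frames - window, num_mel_frames))
            break
        windows.append((start, start + window))
        i += 1
    return windows
```

For one second of audio (80 mel frames) at 25 fps, the window start advances by 3.2 mel frames per video frame. Once a window would run past the end of the audio, a final window is clamped to the last 16 mel frames and the loop stops, so a caller would trim the video to the number of windows returned.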

Workflow:

The system's workflow has three main steps: facial video generation, audio-driven lip synchronization, and face enhancement. All three steps are learning-based and run one after another without user intervention.

1. Facial video generation: First, the system uses an expression editing network to modify the expression in each frame so that it matches a canonical expression template, producing a video with a standardized expression.

2. Audio-driven lip synchronization: Next, this video and the given audio are fed into the lip synchronization network, which generates a video whose mouth shapes are synchronized with the audio.

3. Face enhancement: Finally, the system improves the photorealism of the synthesized face using an identity-aware face enhancement network followed by post-processing.
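The three stages run strictly in sequence, each consuming the previous stage's output. The toy sketch below models only that control flow: `Frame`, `canonicalize_expression`, `lip_sync`, and `enhance_faces` are hypothetical stand-ins that flip flags instead of running the actual networks, and the ordering checks encode the assumption that lip sync operates on canonical-expression frames and enhancement on lip-synced frames.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Frame:
    """Hypothetical per-frame state; real frames would be image tensors."""
    index: int
    expression: str = "original"
    mouth_synced: bool = False
    enhanced: bool = False


def canonicalize_expression(frames: list[Frame], audio: bytes) -> list[Frame]:
    # Step 1 stand-in: the expression editing network maps every frame
    # to the canonical expression template.
    return [replace(f, expression="canonical") for f in frames]


def lip_sync(frames: list[Frame], audio: bytes) -> list[Frame]:
    # Step 2 stand-in: regenerate the mouth region conditioned on the audio.
    if any(f.expression != "canonical" for f in frames):
        raise ValueError("lip_sync expects canonical-expression frames")
    return [replace(f, mouth_synced=True) for f in frames]


def enhance_faces(frames: list[Frame], audio: bytes) -> list[Frame]:
    # Step 3 stand-in: identity-aware face enhancement plus post-processing.
    if not all(f.mouth_synced for f in frames):
        raise ValueError("enhance_faces expects lip-synced frames")
    return [replace(f, enhanced=True) for f in frames]


Stage = Callable[[list[Frame], bytes], list[Frame]]
PIPELINE: tuple[Stage, ...] = (canonicalize_expression, lip_sync, enhance_faces)


def run_pipeline(frames: list[Frame], audio: bytes,
                 stages: tuple[Stage, ...] = PIPELINE) -> list[Frame]:
    # No user intervention: each stage's output feeds the next stage.
    for stage in stages:
        frames = stage(frames, audio)
    return frames
```

Keeping each stage as a separate function also fits the fact that each module is trained individually: a stage can be replaced or tested on its own, as long as it accepts the input the next stage expects.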

Links

Projects and demos: https://opentalker.github.io/video-retalking/

Paper: https://arxiv.org/abs/2211.14758

GitHub: https://github.com/OpenTalker/video-retalking

Colab notebook: https://colab.research.google.com/github/vinthony/video-retalking/blob/main/quick_demo.ipynb

The system is implemented in PyTorch, and each module is trained individually on the VoxCeleb dataset.

VoxCeleb is a large, diverse dataset of talking head videos. It contains 22,496 videos covering many different identities and head poses, which is why it was chosen: it helps the model handle a wide variety of talking head videos.

With this training setup, VideoReTalking provides a talking head video editing system that generates high-quality videos with lip movements synchronized to the input audio.

Video1: Video Results in the Wild.

VideoReTalking is open source.

Tags

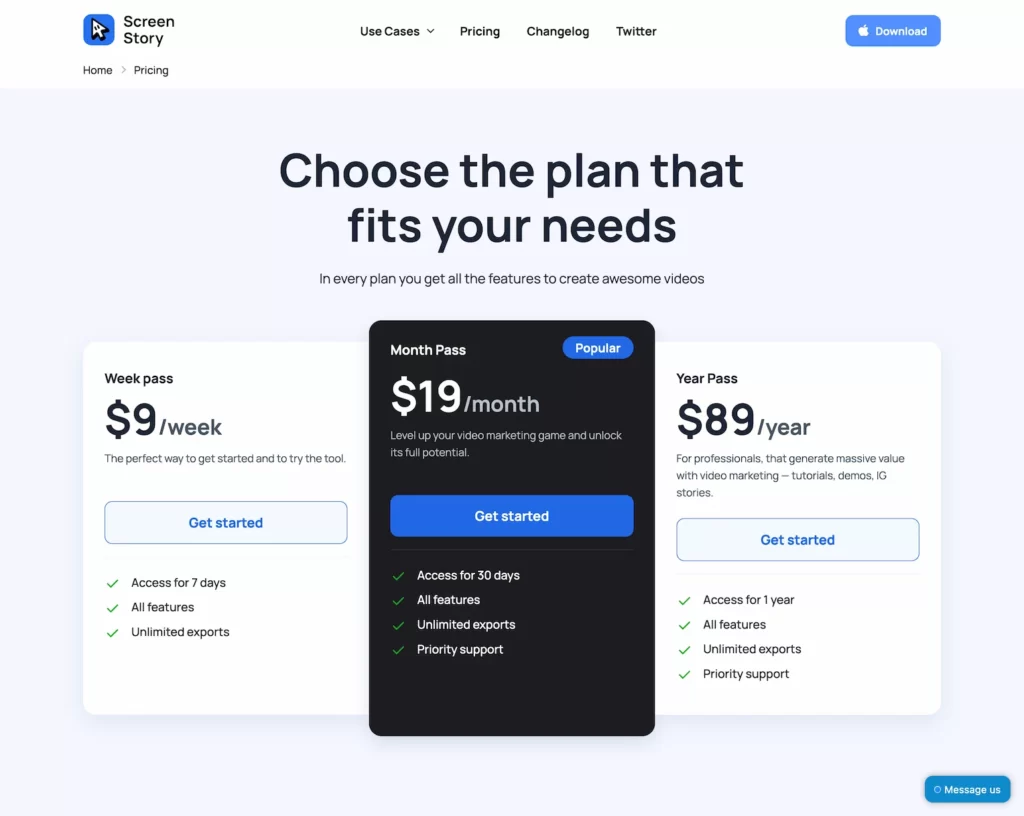

Compare with other popular AI Tools

Compare with Runway

Compare with InVideo-Text prompts to Video

Compare with Eightify-YouTube Video AI Summaries

Compare with Speechify-AI text-to-speech

Compare with simplified

Compare with Opus.pro

Compare with HeyGen-Free AI Video Generator

Compare with FlexClip-AI video editor and maker

Compare with Vidnoz AI-Free Al Video Creator