Description

Riffusion - AI Music Generation

Riffusion is a project for real-time music generation built on Stable Diffusion, an AI image-generation model.

This library and application allow users to generate music and audio by providing text prompts. The concept is centered around converting text into musical compositions, a process known as "Text-to-Music" (txt2music).

Riffusion stands out due to its unique and creative approach to AI-generated music, offering users a new way to interact with music production and explore various styles, instruments, genres, and sounds through text inputs.

Project features

The project's GitHub page provides more information about the library and its functionalities. Additionally, a Hugging Face model is available for users to try out Riffusion's capabilities. The project's official website is a platform to learn about the technology and its creators.

This AI-driven music composition tool has garnered attention for its novel approach and creative possibilities. It was covered in an article on TechCrunch, which highlighted Riffusion's unique approach to generating music. The project has also been shared on platforms like YouTube, where users can see the tool in action.

Riffusion's blend of AI and music composition opens up new avenues for music and technology enthusiasts alike. By translating text prompts into music, it offers a fresh perspective on music generation and is a noteworthy project among AI-driven creative applications.

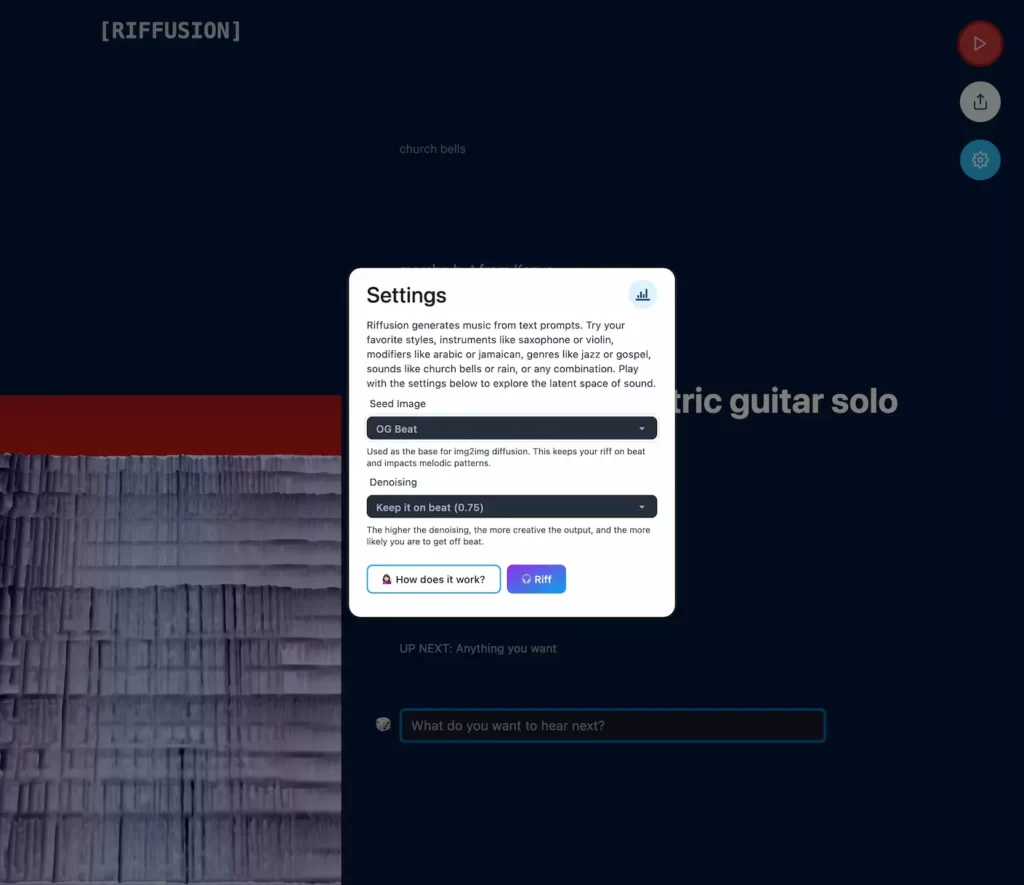

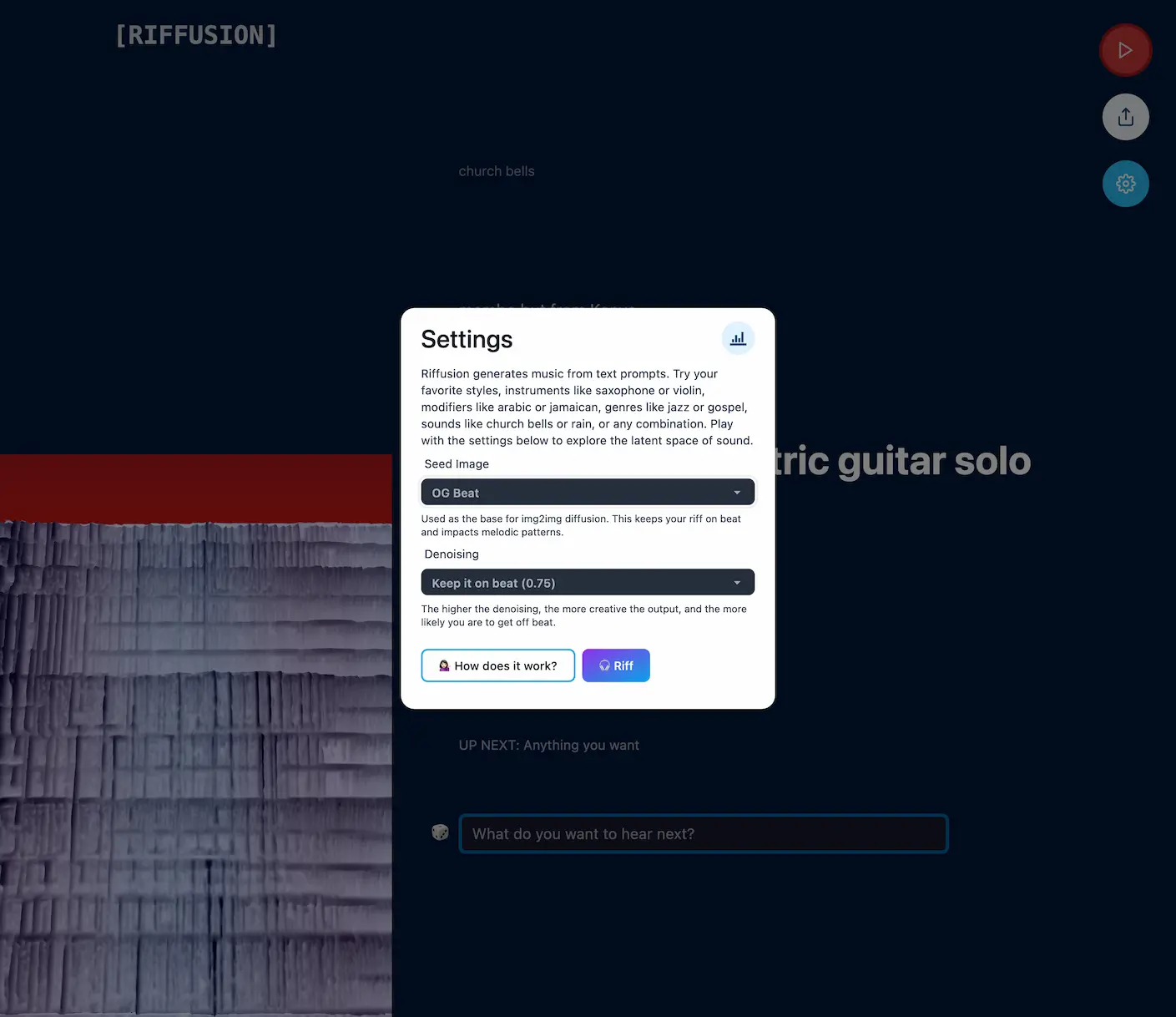

Riffusion generates music from text prompts. Try your favorite styles, instruments like saxophone or violin, modifiers like Arabic or Jamaican, genres like jazz or gospel, sounds like church bells or rain, or any combination. Play with the settings below to explore the latent space of sound.

Diffusion is a machine learning technique used to generate images. Over the past year it has supercharged the AI world, with DALL-E 2 and Stable Diffusion as the two most prominent examples. These models work by replacing visual noise with what the AI thinks the prompt should look like.

This approach has proved powerful in many settings and is easy to fine-tune: giving a mostly trained model a large amount of one specific kind of content makes it better at producing more of that kind. For example, fine-tuning on more watercolor paintings or pictures of cars strengthens the model's ability to generate those subjects.

Seth Forsgren and Hayk Martiros built the Riffusion model by fine-tuning Stable Diffusion on spectrograms. Stable Diffusion is a text-to-image diffusion model: it generates an image by progressively removing noise, guided by a text prompt. A spectrogram is a graphical representation of a signal's frequency content over time, commonly used in signal processing and audio analysis.

"Hayk and I play in a band together, and we started this project simply because we love music. We didn't know whether it was even possible to create a spectrogram with enough fidelity this way to convert it into audio. At every step of the process we have been more and more impressed by what is possible, and one idea leads to the next," Seth Forsgren said.

What is a spectrogram? It is a visual representation of audio that shows the amplitude of different frequencies over time. You may have seen waveforms, which show volume over time and make audio look like a series of hills and canyons. A spectrogram goes further: instead of only the total volume, it shows the volume of each frequency, from the low end to the high end.
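The time-frequency picture described above, usually called a spectrogram, can be computed with a short-time Fourier transform. The following is a minimal sketch using only NumPy; the function name `spectrogram`, the frame size, the hop length, and the test tone are illustrative assumptions, not values taken from the Riffusion code.

```python
import numpy as np

def spectrogram(signal, frame_size=512, hop=128):
    """Return magnitudes with shape (frequency_bins, frames).

    Hypothetical helper for illustration: slices the signal into
    overlapping frames, applies a Hann window, and takes the FFT
    magnitude of each frame.
    """
    window = np.hanning(frame_size)
    n_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_size] * window
        for i in range(n_frames)
    ])
    # rfft keeps only the non-negative frequencies of a real signal
    return np.abs(np.fft.rfft(frames, axis=1)).T

sample_rate = 8000                        # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate  # one second of time stamps

# Test tone: 440 Hz for the first half second, then 880 Hz
tone = np.where(t < 0.5,
                np.sin(2 * np.pi * 440 * t),
                np.sin(2 * np.pi * 880 * t))

spec = spectrogram(tone)
freqs = np.fft.rfftfreq(512, d=1 / sample_rate)

# Loudest frequency in an early frame and in a late frame
early_peak = freqs[spec[:, 5].argmax()]
late_peak = freqs[spec[:, -5].argmax()]
print(spec.shape, early_peak, late_peak)
```

Each column of `spec` is one moment in time and each row is one frequency band, so the early columns peak near 440 Hz and the late columns near 880 Hz, within the frequency resolution of the chosen frame size.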

Forsgren and Martiros produced spectrograms of a range of music and labeled the resulting images with relevant terms, such as "Blues Guitar", "Jazz Piano", "African Rhythm" and so on. Feeding this collection to the model gave it a good idea of what certain sounds "look like" and how to recreate or combine them.
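Because an image model works on pixels, the magnitudes have to be stored as image data before training and recovered from the image after generation. The sketch below shows one plausible way to do that with a decibel scale and 8-bit pixels. The function names, the 80 dB dynamic range, and the mapping itself are assumptions for illustration, not Riffusion's actual encoding.

```python
import numpy as np

def magnitudes_to_image(mag, dynamic_range_db=80.0):
    """Map magnitudes to 8-bit pixels on a decibel scale (hypothetical encoding)."""
    db = 20.0 * np.log10(np.maximum(mag, 1e-10))  # avoid log of zero
    peak_db = db.max()
    floor_db = peak_db - dynamic_range_db
    db = np.clip(db, floor_db, peak_db)
    pixels = np.round((db - floor_db) / dynamic_range_db * 255.0)
    return pixels.astype(np.uint8), peak_db

def image_to_magnitudes(pixels, peak_db, dynamic_range_db=80.0):
    """Invert magnitudes_to_image, up to quantization error."""
    floor_db = peak_db - dynamic_range_db
    db = pixels.astype(np.float64) / 255.0 * dynamic_range_db + floor_db
    return 10.0 ** (db / 20.0)

# Stand-in data: random magnitudes instead of a real spectrogram
rng = np.random.default_rng(0)
mag = rng.uniform(0.01, 1.0, size=(4, 6))

pixels, peak_db = magnitudes_to_image(mag)
restored = image_to_magnitudes(pixels, peak_db)

# Round-trip error in decibels, caused only by 8-bit quantization
max_error_db = np.abs(20 * np.log10(restored) - 20 * np.log10(mag)).max()
print(pixels.dtype, max_error_db)
```

An image like this keeps only the magnitudes. The phase of each frequency is discarded, so turning a generated image back into audio also needs a phase-estimation step, which this sketch does not cover.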

At present, Riffusion is not mature enough to produce long-form content, although doing so is possible in theory. Forsgren said they have not really tried to create a classic 3-minute song with repeating melodies and lyrics. They believe it could be achieved with some clever techniques, such as building a higher-level model for song structure and then using a lower-level model for individual clips, or by deeply training Riffusion's model on much higher-resolution images of full songs.

Riffusion is not part of any grand plan to reshape music. Forsgren said that he and Martiros are simply happy to see people engage with their work, have fun, build their own ideas on top of the code, and iterate on it. People can build on things quickly, in directions the original authors could not predict.

You can currently try the model directly on Riffusion.com. All of the code is available via the About page, so if you are interested you can join the Riffusion community at any time.

https://github.com/riffusion/riffusion

Open Source
